Across Indian process manufacturing, most digital initiatives focus on OT (operational technology) data: the time-series data from sensors, DCS, PLC/SCADA systems, and historians. These initiatives move fast, and their value is immediately visible. Many organisations already run ML/AI use cases on top of OT data, such as anomaly detection, equipment health monitoring, and early failure warning.

The challenge is that many organisations remain confined to this approach for too long.

OT data provides a snapshot of what is happening now at the machine and physical process level. It lacks the broader context required for complex business trade-offs. Without integrating other data streams, these initiatives often end up as an augmented plant-level HMI: the dashboard looks better, but decision-makers are still left with significant blind spots.

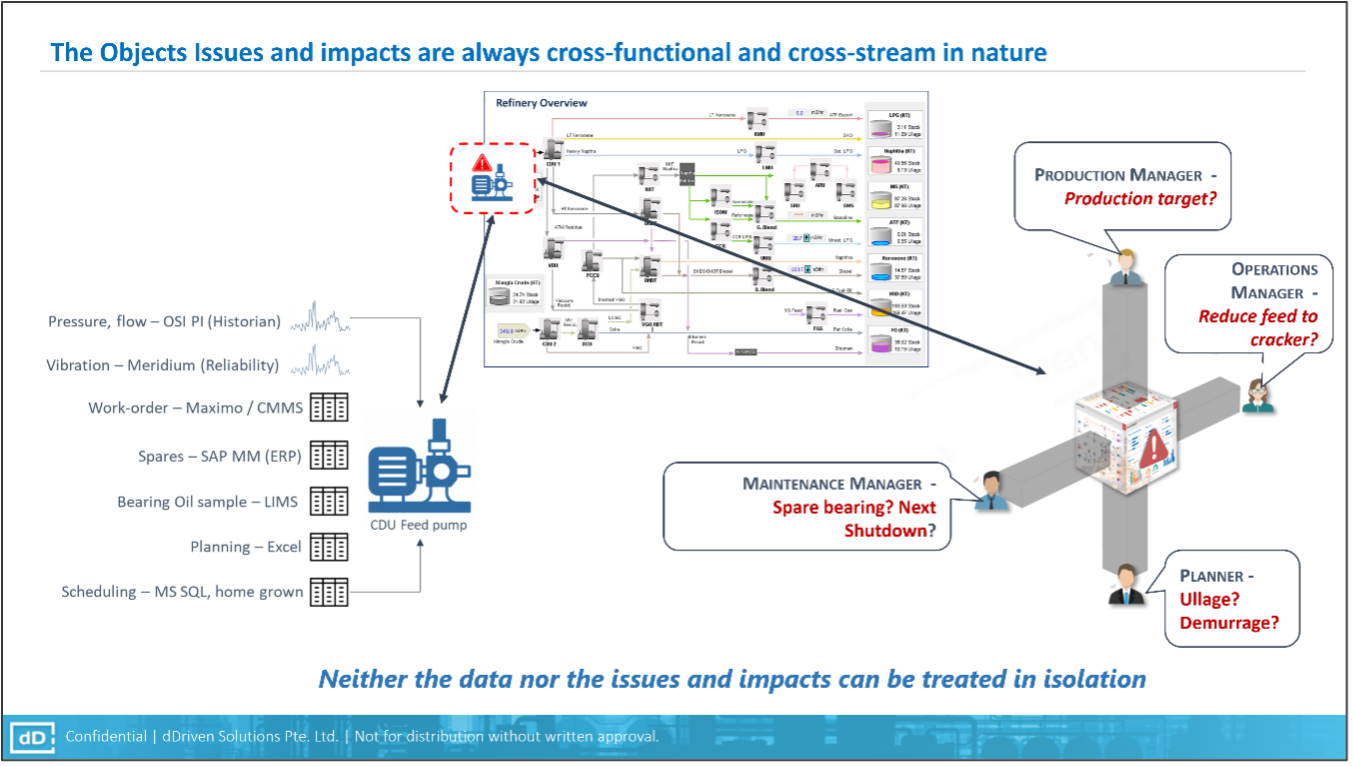

In a typical plant, the impact of a single physical object, such as a CDU feed pump in a petroleum refinery, is never isolated. As the infographic above shows, understanding its potential impact, and how to mitigate or minimise it, requires a holistic approach. Even managing the pump in isolation requires integrating multiple data streams.

When analytics is performed in isolation using only OT data, the organisation loses situational awareness. A maintenance manager might see a vibration alert but cannot see whether a spare bearing is available, how a shutdown would affect the current production target, or whether demurrage risks are accumulating at the port.

During periods of growth, the risks become magnified. Decision-making does not scale at the same pace as physical capacity. Utilisation ramps slowly. Inventory buffers rise fast. Working capital stretches. The returns on capital remain structurally sub-optimal. By the time decision-making catches up, the operating reality has already changed — new products, new constraints, new commitments.

Most organisations recognise this gap. It persists for structural reasons. Extending analytics beyond OT data introduces immediate costs: multiple data owners, governance issues, integration effort, and accumulated technical and organisational debt. The effort (and risk) of crossing this boundary is borne upfront, while the benefits accrue gradually and are diffused across functions.

As a result, organisations make a rational choice. They continue to stack dashboards, models, and logic on top of the OT layer. More data is produced and more analytics deployed, yet critical decisions still depend on experience and informal coordination. The organisation becomes data-rich but remains context-poor.


True data-driven insight requires more than just stacking models on top of the OT layer. It requires an enterprise data foundation that captures the interconnectivity between physical objects (pumps, heat exchangers, compressors) and non-physical objects (plans, schedules, work orders, purchase requisitions).

A well-functioning data foundation must continuously ingest data from all existing and future sources (IT and OT systems, spreadsheets, documents, etc.), organise it into reusable structures, and make this context available across teams. It should evolve as new use cases emerge, without repeated integration effort or point solutions. When this flexibility is missing, maintaining the data foundation becomes a burden.

The screenshot (Figure 2) illustrates what becomes possible with an integrated view of a gas turbine and generator skid. In an OT-only setup, a maintenance lead sees a vibration spike on the turbine bearing. They must make phone calls, check spreadsheets, and rely on memory to understand the implications. With an integrated foundation, that same alert arrives with additional context: no spare bearing is currently in stock; a vessel is due for berthing in 36 hours; a planned shutdown next week could absorb this repair without production loss; the procurement lead has already flagged a supplier delay.
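To make the idea of "an alert that arrives with context" more concrete, here is a minimal Python sketch. It assumes a deliberately simplified model in which every physical object (the turbine) and non-physical object (a spare, a shutdown plan, a vessel schedule, a procurement note) is stored as a node, and typed links connect them. The class names (`DataObject`, `Link`, `ContextGraph`), the function `enrich_alert`, the relation names, and all IDs and values are hypothetical and chosen only to mirror the gas turbine example above; an in-memory dictionary stands in for whatever platform actually backs a real data foundation.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    """A typed relationship from one object to another (hypothetical schema)."""
    relation: str  # e.g. "requires_spare", "covered_by_shutdown"
    target: str    # obj_id of the linked object


@dataclass
class DataObject:
    """A physical object (pump, turbine) or non-physical one (plan, work order)."""
    obj_id: str
    kind: str
    attrs: dict = field(default_factory=dict)
    links: list = field(default_factory=list)


class ContextGraph:
    """In-memory stand-in for an enterprise data foundation."""

    def __init__(self):
        self.objects = {}

    def add(self, obj):
        self.objects[obj.obj_id] = obj

    def neighbours(self, obj_id):
        # Follow outgoing links; silently skip targets not (yet) ingested.
        return [
            (link.relation, self.objects[link.target])
            for link in self.objects[obj_id].links
            if link.target in self.objects
        ]


def enrich_alert(graph, asset_id, signal):
    """Attach cross-functional context to an OT alert on one asset."""
    context = []
    for relation, obj in graph.neighbours(asset_id):
        if relation == "requires_spare":
            context.append(f"spare {obj.obj_id}: {obj.attrs.get('on_hand', 0)} in stock")
        elif relation == "covered_by_shutdown":
            context.append(f"planned shutdown {obj.obj_id} starts in {obj.attrs['days_to_start']} days")
        elif relation == "affects_loading":
            context.append(f"vessel {obj.obj_id} berths in {obj.attrs['hours_to_berth']} h")
        elif relation == "has_procurement_note":
            context.append(f"procurement: {obj.attrs['note']}")
    return {"asset": asset_id, "signal": signal, "context": context}


# Hypothetical objects mirroring the gas turbine example; all IDs and values are illustrative.
graph = ContextGraph()
graph.add(DataObject("BRG-01", "spare", {"on_hand": 0}))
graph.add(DataObject("SD-01", "shutdown", {"days_to_start": 7}))
graph.add(DataObject("VSL-01", "vessel", {"hours_to_berth": 36}))
graph.add(DataObject("PN-01", "procurement_note", {"note": "supplier delay flagged"}))
graph.add(DataObject("GT-01", "asset", links=[
    Link("requires_spare", "BRG-01"),
    Link("covered_by_shutdown", "SD-01"),
    Link("affects_loading", "VSL-01"),
    Link("has_procurement_note", "PN-01"),
]))

alert = enrich_alert(graph, "GT-01", "turbine bearing vibration spike")
for line in alert["context"]:
    print(line)
```

The design choice worth noting is that context lives in the links, not in the alerting logic. Supporting a new use case then means ingesting new objects and relations once, rather than building another point-to-point integration for each dashboard.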

The path forward is incremental. Start with one underperforming plant. Establish the data foundation for that scope, integrating cross-functional data streams, then extend it gradually. By unifying disparate data streams, organisations can turn their analytics from a local optimisation tool into a strategic engine that maximises returns on deployed capital.